Face Recognition System: A ResNet Model Implemented with MindSpore

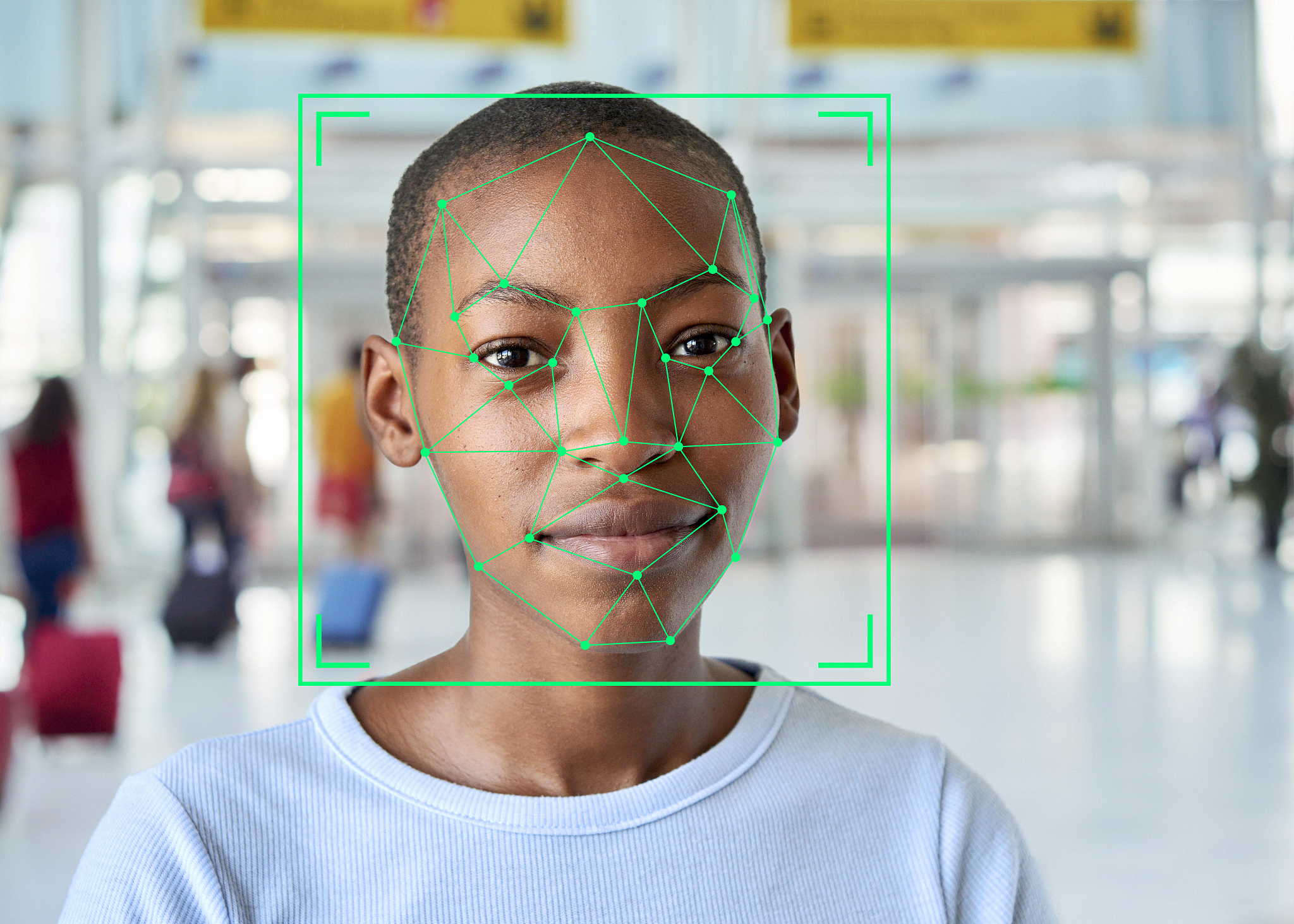

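This project builds a face recognition system with the MindSpore framework and a ResNet model. The system captures face images from a camera in real time and classifies them.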

1. Runtime Environment

- Python 3.7

- MindSpore 1.5

- OpenCV

- Pillow

- SciPy

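2. Dataset Preparation

This project uses a custom face dataset of 100 people with 100 images per person. The dataset is split into a training set and a test set at a ratio of 8:2 (80 training and 20 test images per person). The training code expects each split to be a flat folder in which every file name starts with the integer class label (0 to 99, since the model has 100 outputs) followed by an underscore, for example `12_001.jpg`.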
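The 8:2 split and the file naming can be produced with a small script. This is a minimal sketch, assuming (hypothetically) that the raw photos are stored as one sub-folder per person, `raw/<label>/<file>`, with integer folder names. The function name `split_dataset` and the folder layout are illustrative; the output naming matches what `TrainDatasetGenerator` parses in the training code.

```python
import os
import random
import shutil


def split_dataset(raw_dir, train_dir, test_dir, train_ratio=0.8, seed=58):
    """Split raw_dir/<label>/<file> into flat train/test folders (hypothetical layout).

    Each copied file is renamed to '<label>_<file>' so that the label can be
    recovered from the part of the name before the first underscore.
    Returns the number of files written to (train_dir, test_dir).
    """
    rng = random.Random(seed)
    os.makedirs(train_dir, exist_ok=True)
    os.makedirs(test_dir, exist_ok=True)
    n_train = n_test = 0
    for label in sorted(os.listdir(raw_dir)):
        class_dir = os.path.join(raw_dir, label)
        if not os.path.isdir(class_dir):
            continue  # ignore stray files next to the class folders
        files = sorted(os.listdir(class_dir))
        rng.shuffle(files)
        cut = int(len(files) * train_ratio)  # 100 images -> 80 train, 20 test
        for i, name in enumerate(files):
            dst_dir = train_dir if i < cut else test_dir
            shutil.copy(os.path.join(class_dir, name),
                        os.path.join(dst_dir, f"{label}_{name}"))
            if i < cut:
                n_train += 1
            else:
                n_test += 1
    return n_train, n_test
```

Called as `split_dataset('raw', 'D:/pythonProject7/train1', 'D:/pythonProject7/test1')`, it fills the two folders used by the training script. Splitting per person (rather than over the whole pool) keeps the 8:2 ratio for every class.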

3. Model Training

The training code follows. It defines the ResNet model, loads the training and validation sets, trains the model, and evaluates it.

```python
# main.py: training script. The inference script in section 4 imports ResNet and BasicBlock from this module.
import os

import cv2
import numpy as np
import mindspore.dataset as ds
import mindspore.nn as nn
import mindspore.ops as ops
from mindspore import Model, context
from mindspore.nn.metrics import Accuracy
from mindspore.train.callback import ModelCheckpoint, CheckpointConfig, LossMonitor, TimeMonitor

np.random.seed(58)


class BasicBlock(nn.Cell):
    """Basic residual block: two 3x3 convolutions plus a shortcut connection."""

    def __init__(self, in_channels, out_channels, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride,
                               padding=1, pad_mode='pad', has_bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=1,
                               padding=1, pad_mode='pad', has_bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        self.downsample = downsample
        self.add = ops.Add()  # element-wise addition for the residual connection

    def construct(self, x):
        identity = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        # Project the shortcut when the stride or the channel count changes
        if self.downsample is not None:
            identity = self.downsample(x)
        out = self.add(out, identity)
        out = self.relu(out)
        return out


class ResNet(nn.Cell):
    def __init__(self, block, layers, num_classes=10):
        super(ResNet, self).__init__()
        self.in_channels = 64
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, pad_mode='pad', has_bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU()
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, pad_mode='same')
        self.layer1 = self.make_layer(block, 64, layers[0])
        self.layer2 = self.make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self.make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self.make_layer(block, 512, layers[3], stride=2)
        # For a 224x224 input the last feature map is 7x7, so this pools it to 1x1
        self.avgpool = nn.AvgPool2d(kernel_size=7, stride=1)
        self.flatten = nn.Flatten()
        self.fc = nn.Dense(512, num_classes)

    def make_layer(self, block, out_channels, blocks, stride=1):
        # A 1x1 convolution matches the shortcut shape when the block changes it
        downsample = None
        if stride != 1 or self.in_channels != out_channels:
            downsample = nn.SequentialCell([
                nn.Conv2d(self.in_channels, out_channels, kernel_size=1, stride=stride, has_bias=False),
                nn.BatchNorm2d(out_channels)
            ])
        layers = [block(self.in_channels, out_channels, stride, downsample)]
        self.in_channels = out_channels
        for _ in range(1, blocks):
            layers.append(block(out_channels, out_channels))
        return nn.SequentialCell(layers)

    def construct(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.avgpool(x)
        x = self.flatten(x)
        x = self.fc(x)
        return x


class TrainDatasetGenerator:
    """Reads images named '<label>_<anything>' from a flat directory."""

    def __init__(self, file_path):
        self.file_path = file_path
        self.img_names = os.listdir(file_path)

    def __getitem__(self, index):
        data = cv2.imread(os.path.join(self.file_path, self.img_names[index]))
        # The class label is the integer before the first underscore; sparse labels must be int32
        label = np.array(int(self.img_names[index].split('_')[0]), dtype=np.int32)
        data = cv2.cvtColor(data, cv2.COLOR_BGR2RGB)
        data = cv2.resize(data, (224, 224))
        # HWC -> CHW (a bare transpose() would give CHW with H and W swapped), scaled to [0, 1]
        data = data.transpose(2, 0, 1).astype(np.float32) / 255.
        return data, label

    def __len__(self):
        return len(self.img_names)


def train_resnet():
    context.set_context(mode=context.GRAPH_MODE, device_target='CPU')

    # Training set: shuffled, batched, incomplete last batch dropped
    train_dataset_generator = TrainDatasetGenerator('D:/pythonProject7/train1')
    ds_train = ds.GeneratorDataset(train_dataset_generator, ['data', 'label'], shuffle=True)
    ds_train = ds_train.shuffle(buffer_size=10)
    ds_train = ds_train.batch(batch_size=4, drop_remainder=True)

    # Validation set
    valid_dataset_generator = TrainDatasetGenerator('D:/pythonProject7/test1')
    ds_valid = ds.GeneratorDataset(valid_dataset_generator, ['data', 'label'], shuffle=True)
    ds_valid = ds_valid.batch(batch_size=4, drop_remainder=True)

    # ResNet-18 layout ([2, 2, 2, 2] basic blocks) with one output per person
    network = ResNet(BasicBlock, [2, 2, 2, 2], num_classes=100)
    net_loss = nn.SoftmaxCrossEntropyWithLogits(sparse=True, reduction='mean')
    net_opt = nn.Momentum(network.trainable_params(), learning_rate=0.01, momentum=0.9)

    time_cb = TimeMonitor(data_size=ds_train.get_dataset_size())
    # Save a checkpoint every 10 steps and keep the 10 most recent ones
    config_ck = CheckpointConfig(save_checkpoint_steps=10, keep_checkpoint_max=10)
    config_ckpt_path = 'D:/pythonProject7/ckpt/'
    ckpoint_cb = ModelCheckpoint(prefix='checkpoint_resnet', directory=config_ckpt_path, config=config_ck)

    model = Model(network, net_loss, net_opt, metrics={'Accuracy': Accuracy()})

    # Three rounds of 10 epochs, evaluating on the validation set after each round
    epoch_size = 10
    for _ in range(3):
        print('============== Starting Training =============')
        model.train(epoch_size, ds_train, callbacks=[time_cb, ckpoint_cb, LossMonitor()])
        acc = model.eval(ds_valid)
        print('============== {} ============='.format(acc))


if __name__ == '__main__':
    train_resnet()
```
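The `AvgPool2d(kernel_size=7)` layer only fits because a 224×224 input has shrunk to 7×7 by the end of `layer4`. The standard output-size formula for a convolution or pooling layer, floor((n + 2p - k) / s) + 1, can be traced through the network by hand; the plain-Python sketch below does that (it is a size calculation, not MindSpore code). The `'same'` max-pool is modelled as ceil(n / s), which is how `'same'` padding behaves, and only the first 3×3 convolution of each stage is counted because the second one keeps the size.

```python
import math


def conv_out(n, kernel, stride, padding):
    """Output size of a convolution or pooling layer with explicit padding."""
    return (n + 2 * padding - kernel) // stride + 1


def same_out(n, stride):
    """Output size with pad_mode='same': ceil(n / stride)."""
    return math.ceil(n / stride)


def resnet18_feature_sizes(n=224):
    """Spatial size after each stage of the ResNet defined in the training code."""
    sizes = {}
    n = conv_out(n, kernel=7, stride=2, padding=3)  # conv1
    sizes['conv1'] = n
    n = same_out(n, stride=2)                       # maxpool
    sizes['maxpool'] = n
    for name, stride in (('layer1', 1), ('layer2', 2), ('layer3', 2), ('layer4', 2)):
        # Only the first 3x3 conv of each stage strides; the second keeps the size
        n = conv_out(n, kernel=3, stride=stride, padding=1)
        sizes[name] = n
    n = conv_out(n, kernel=7, stride=1, padding=0)  # avgpool, 'valid'
    sizes['avgpool'] = n
    return sizes


print(resnet18_feature_sizes())
# {'conv1': 112, 'maxpool': 56, 'layer1': 56, 'layer2': 28, 'layer3': 14, 'layer4': 7, 'avgpool': 1}
```

With a smaller input such as 160×160 the last stage ends at 5×5, the 7×7 average pool no longer fits, and the `Dense(512, num_classes)` layer would not receive a 512-element vector, so changing the input size also means changing the pooling layer.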

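4. Using the Model

The inference code follows. It loads the trained model, reads frames from the camera, detects faces, preprocesses each face, predicts its class, and displays the result.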

```python
import os

import cv2
import numpy as np
from mindspore import Tensor, load_checkpoint, load_param_into_net

from main import ResNet, BasicBlock  # model definition from the training script (main.py)

# Load class names: line i of label.txt is the name of class i
with open('label.txt', encoding='utf-8') as f:
    labels = [line.strip() for line in f]

# Load OpenCV's Haar cascade face detector
face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')

# Use the most recently saved checkpoint in the ckpt folder
ckpt_dir = 'D:/pythonProject7/ckpt/'
ckpt_files = [f for f in os.listdir(ckpt_dir) if f.endswith('.ckpt')]
ckpt_files.sort(key=lambda x: os.path.getmtime(os.path.join(ckpt_dir, x)))
latest_ckpt = os.path.join(ckpt_dir, ckpt_files[-1])

# Build the network and load the trained weights
network = ResNet(BasicBlock, [2, 2, 2, 2], num_classes=100)
load_param_into_net(network, load_checkpoint(latest_ckpt))
network.set_train(False)  # BatchNorm uses its moving statistics at inference time

# Open the camera
cap = cv2.VideoCapture(0)

while True:
    # Read a video frame; stop if the camera returns nothing
    ret, frame = cap.read()
    if not ret:
        break

    # The Haar detector works on grayscale images
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    faces = face_cascade.detectMultiScale(gray, 1.3, 5)

    for (x, y, w, h) in faces:
        # Crop the face from the color frame and preprocess it like TrainDatasetGenerator:
        # BGR -> RGB, resize to 224x224, HWC -> CHW, scale to [0, 1]
        face = cv2.cvtColor(frame[y:y + h, x:x + w], cv2.COLOR_BGR2RGB)
        face = cv2.resize(face, (224, 224))
        face = face.transpose(2, 0, 1).astype(np.float32) / 255.
        # Add the batch dimension: (1, 3, 224, 224)
        face = Tensor(face[np.newaxis, ...])

        # Predict the class of the face
        output = network(face)
        prediction = int(np.argmax(output.asnumpy(), axis=1)[0])

        # Draw the box and the label (OpenCV's Hershey fonts only render ASCII text)
        cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
        cv2.putText(frame, labels[prediction], (x, y - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.9, (0, 0, 255), 2)

    # Show the frame; press q to quit
    cv2.imshow('frame', frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release the camera and close the window
cap.release()
cv2.destroyAllWindows()
```
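Training and inference must feed the network identically shaped and scaled inputs, so the shared step can be isolated in one helper. A minimal NumPy sketch, assuming the face crop has already been converted to RGB and resized to 224×224; the name `to_model_input` is hypothetical. It also shows why a bare `.transpose()` is not an HWC-to-CHW conversion: it reverses all three axes, so height and width end up swapped.

```python
import numpy as np


def to_model_input(face_rgb):
    """Convert an (H, W, 3) uint8 RGB crop into a (1, 3, H, W) float32 batch in [0, 1]."""
    chw = face_rgb.transpose(2, 0, 1).astype(np.float32) / 255.  # HWC -> CHW
    return chw[np.newaxis, ...]                                   # add the batch axis


# A non-square dummy image makes the axis order visible
img = np.zeros((4, 6, 3), dtype=np.uint8)
print(img.transpose().shape)      # (3, 6, 4): channels first, but H and W swapped
print(to_model_input(img).shape)  # (1, 3, 4, 6)
```

Calling the same helper from both `TrainDatasetGenerator.__getitem__` (without the batch axis) and the camera loop is one way to keep the two paths from drifting apart.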

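5. Troubleshooting

Why is every person recognized as No. 4?

The training batch size is unlikely to be the cause. `batch_size=4` only sets how many training images go into one optimization step; at inference time the network is called once per detected face, so the training batch size neither limits how many faces can be recognized nor decides which ones. More plausible causes:

- The inference preprocessing does not match training. Training reads color images, converts them to RGB, resizes them to 224×224 and scales pixels to [0, 1] with no mean/std normalization. Cropping the face from the grayscale frame, or applying ImageNet mean/std normalization only at inference time, gives the network inputs it never saw during training, and it may then predict the same class for everyone.

- The axis order differs. `transpose(2, 0, 1)` turns an (H, W, C) image into (C, H, W); a bare `transpose()` reverses all axes and yields (C, W, H). Training and inference must use the same order.

- The batch dimension is missing. The network expects a 4-D input of shape (1, 3, 224, 224), so the batch axis must be added before calling it.

- The model is undertrained. Check the validation accuracy printed after training; if it is near chance level (1% for 100 classes), the problem lies in training, not in the camera loop.

How can the recognition results be corrected?

- Keep the training batch size as it is; setting it to 1 does not change how many faces are recognized at inference time.

- Make the inference preprocessing identical to the training preprocessing, add the batch axis, and call `network.set_train(False)` before predicting.

- Predicted class indices start at 0, and with `num_classes=100` the file-name labels must be 0 to 99, so `labels[prediction]` is correct when line i of `label.txt` holds the name of class i. Use `labels[prediction + 1]` only if `label.txt` has an extra first line, such as a header.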
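A quick way to tell whether the model itself has collapsed to one class, rather than the camera pipeline being at fault, is to count the predicted classes over the validation set. A minimal plain-Python sketch, assuming the predicted class indices have already been collected (for example with `np.argmax(network(batch).asnumpy(), axis=1)` for each validation batch); the function name `dominant_class_share` and the 50% threshold are illustrative choices, not part of the original project.

```python
from collections import Counter


def dominant_class_share(predictions):
    """Return (most common class, fraction of predictions that are that class)."""
    if not predictions:
        raise ValueError("no predictions to inspect")
    label, count = Counter(predictions).most_common(1)[0]
    return label, count / len(predictions)


preds = [4, 4, 4, 7, 4, 4, 1, 4]
label, share = dominant_class_share(preds)
if share > 0.5:  # illustrative threshold
    print(f"warning: {share:.0%} of predictions are class {label}")
# prints: warning: 75% of predictions are class 4
```

On a balanced validation set of 100 classes, no single class should receive much more than about 1% of the predictions; a share far above that points to a training or preprocessing problem.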

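6. Summary

This project uses the MindSpore framework and a ResNet model to build a face recognition system that captures faces from a camera in real time and classifies them. The code is short and easy to follow, which makes it convenient for learning and experimentation.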

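Notes:

- Training and inference here run on the CPU. For faster training and inference, install the GPU build of MindSpore and set `device_target='GPU'` in `context.set_context`.

- The project uses a custom face dataset. To use another dataset, adjust the data paths and make sure the file names follow the `<label>_<name>` convention that `TrainDatasetGenerator` parses, or change the parser.

- The recognition accuracy still has room for improvement, for example by tuning the model's hyperparameters or optimizing the training process.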
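As one example of optimizing the training process, the fixed `learning_rate=0.01` could be replaced with a per-step cosine decay. MindSpore optimizers such as `nn.Momentum` accept a list of per-step learning rates as well as a single float, so the schedule can be built in plain Python. The values below (0.01 decaying to 0.0001) are illustrative assumptions, not tuned settings, and `cosine_lr` is a hypothetical helper; MindSpore also provides `nn.cosine_decay_lr` for a similar per-epoch schedule.

```python
import math


def cosine_lr(max_lr, min_lr, steps_per_epoch, epochs):
    """One learning rate per training step, decaying from max_lr to min_lr along a half cosine."""
    total = steps_per_epoch * epochs
    return [
        min_lr + 0.5 * (max_lr - min_lr) * (1 + math.cos(math.pi * step / total))
        for step in range(total)
    ]


# 8,000 training images with batch_size=4 and drop_remainder=True give 2,000 steps per epoch
lrs = cosine_lr(0.01, 0.0001, steps_per_epoch=2000, epochs=30)
print(len(lrs))  # 60000
```

In `train_resnet()` the list would be passed as `learning_rate=cosine_lr(0.01, 0.0001, ds_train.get_dataset_size(), 30)`. Because the optimizer's step counter should keep running across the three `model.train` calls, the list is sized for all 30 epochs rather than for a single 10-epoch round.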

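Suggestions:

- Try building the face recognition system with another deep learning framework, such as TensorFlow or PyTorch.

- Try other models, such as MobileNet or VGG.

- Try other techniques, such as feature extraction and face alignment, to improve recognition accuracy.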
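The feature-extraction approach works differently from the classifier above: instead of predicting one of 100 fixed classes, a network maps each face to an embedding vector, and identity is decided by comparing vectors. A minimal plain-Python sketch of the comparison step only, assuming the embeddings already exist (for instance, the 512-dimensional output of the ResNet's `flatten` layer, before `fc`); the gallery contents, the names, and the 0.6 threshold are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(query, gallery, threshold=0.6):
    """Return the gallery name most similar to query, or None if no match clears the threshold."""
    best_name, best_score = None, -1.0
    for name, emb in gallery.items():
        score = cosine_similarity(query, emb)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


gallery = {'alice': [1.0, 0.0, 0.0], 'bob': [0.0, 1.0, 0.0]}
print(identify([0.9, 0.1, 0.0], gallery))  # alice
print(identify([0.0, 0.0, 1.0], gallery))  # None (unknown face)
```

With this design, adding a new person only means storing one more embedding in the gallery, without retraining a classification layer, and faces that match nobody can be reported as unknown instead of being forced into an existing class.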

Original article: https://www.cveoy.top/t/topic/jqm0. Copyright belongs to the author. Please do not repost or scrape.